#380 Memcached & Dalli pro

Let’s say that we have a Rails application with a popular page that loads slowly and whose performance we’d like to improve. One of the most effective ways to do this is to use caching. We’ve covered various caching techniques in the past but one thing we haven’t talked about is where the cache is stored. Rails’ cache store functionality is very modular. It uses the file system to store the cache by default but we can easily change this to store it elsewhere. Rails comes with several cache store options that we can choose from. The default used to be a memory store which stored the cache in the local memory of each Rails process. The issue with this is that in production we often have multiple Rails instances running and each of these will have its own cache store, which isn’t a good use of resources. The file store works well for smaller applications but isn’t very efficient as reading from and writing to the hard drive is relatively slow. If we use this for a cache that’s accessed frequently we’d be better off using something else.

This brings us to the memcache store which offers the best of both worlds. It is meant to be used with a Memcached server, which means that the cache can be shared across multiple Rails instances or even separate servers. Access will be very fast as the data is stored in memory. This is a great option for serious caching but it’s best not to use the mem_cache_store that Rails includes. Instead we should use Dalli which is much improved and which supports some additional features such as Memcached’s binary protocol. Note, though, that Dalli requires Memcached 1.4 or later. In the upcoming Rails 4 release the built-in memcache store has been updated to use Dalli but in the meantime Dalli includes its own Rails cache store that we can use directly in Rails 3 applications.

Installing Memcached and Dalli

We have a Rails application with a cache store that we’ll switch to use Memcached and Dalli. The first step is to install Memcached, though if you’re running OS X it comes pre-installed. The easiest way to upgrade to a newer version is through Homebrew and we’ll do that now.

$ brew install memcached

We can now start it up manually with this command.

$ /usr/local/bin/memcached

Now that we have Memcached running we’ll set it up as the cache store for our application. We’ll add the dalli gem to the Gemfile then run bundle to install it.

gem 'dalli'

Next we’ll modify the development config file and temporarily enable caching so that we can try it out. Ideally we’d set up a staging environment so that we could experiment with caching extensively on our local machine; this was covered in episode 72. We’ll also set the cache_store to dalli_store. If we were putting this app into production we’d need to do the same in our production environment.

# Show full error reports and disable caching
config.consider_all_requests_local = true
config.action_controller.perform_caching = true
config.cache_store = :dalli_store

Using Dalli

We can try this out in the console now. If we access Rails.cache we’ll see that it’s now an instance of DalliStore.

>> Rails.cache
=> #<ActiveSupport::Cache::DalliStore:0x007fb05be840c8 @options={:compress=>nil}, @raise_errors=false, @data=#<Dalli::Client:0x007fb05be83f88 @servers="127.0.0.1:11211", @options={:compress=>nil}, @ring=nil>>

If we try writing to the cache it will write directly to Memcached and we can read the cached value back.

>> Rails.cache.write(:foo, 1)
=> true
>> Rails.cache.read(:foo)
=> 1

Instead of using read and write we can call fetch. This will attempt to read a value and if that fails, execute a block and set the cache to the result. If we run Rails.cache.fetch(:bar) { sleep 1; 2 } it will take a second to run as that value doesn’t exist in the cache and so the code in the block will be run. If we run it again it will return the stored value instantly.
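The order of operations behind fetch can be sketched in plain Ruby. The TinyCache class below is a hypothetical, hash-backed stand-in and not how Dalli or Rails implement it; it only mirrors the behaviour described above: look the key up, and run the block and store its result only when nothing was found.

```ruby
# TinyCache is a hypothetical stand-in for a cache store, used only to
# illustrate the read-then-compute behaviour of fetch.
class TinyCache
  attr_reader :block_calls

  def initialize
    @data = {}
    @block_calls = 0 # counts how often fetch had to run its block
  end

  def read(key)
    @data[key]
  end

  def write(key, value)
    @data[key] = value
    true
  end

  # Return the cached value if there is one; otherwise run the block,
  # store its result under the key and return it.
  def fetch(key)
    cached = read(key)
    return cached unless cached.nil?

    @block_calls += 1
    value = yield
    write(key, value)
    value
  end
end

cache = TinyCache.new
cache.fetch(:bar) { 2 }  # miss: the block runs and its result is stored
cache.fetch(:bar) { 99 } # hit: the block is skipped and the stored value is returned
```

In this sketch a stored nil is treated as a miss, so the block would run again for it.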

There’s another useful method called read_multi. This will attempt to access all the values for each of the keys passed in and return a hash.

>> Rails.cache.read_multi(:foo, :bar)
=> {:foo=>1, :bar=>2}

This command is important if we’re running Memcached on a separate machine as it means that we can fetch multiple values without making multiple trips over the network. One final command worth a mention is Rails.cache.stats. This is especially useful when debugging Memcached as it returns a hash of useful data including the number of items stored, how many bytes are used and the maximum number of bytes allowed. Once this limit is reached the least recently used items will be evicted to make room for new ones. We can also find out from this information how often the maximum number of connections was reached and keeping an eye on this is recommended.
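As a rough sketch of how we might pull those figures out of the stats hash, the helper below picks a few of Memcached’s standard counters (curr_items, bytes, limit_maxbytes and listen_disabled_num). The helper name is made up, the sample numbers are invented, and the shape of the hash (one entry per server, with string keys and string values) is an assumption about what the client returns, so it is worth checking against the real output in the console.

```ruby
# Hypothetical helper: condense a per-server stats hash into the figures
# mentioned above. Assumes string keys and string values for each stat.
def summarize_stats(stats)
  stats.each_with_object({}) do |(server, values), summary|
    next if values.nil? # assumed: a server with no data shows up as nil

    used  = values["bytes"].to_i
    limit = values["limit_maxbytes"].to_i
    summary[server] = {
      items: values["curr_items"].to_i,
      percent_full: limit.zero? ? 0.0 : (used * 100.0 / limit).round(1),
      connection_limit_hits: values["listen_disabled_num"].to_i
    }
  end
end

# Invented sample values for illustration; in the console we would pass
# Rails.cache.stats instead.
sample = {
  "127.0.0.1:11211" => {
    "curr_items" => "2",
    "bytes" => "16777216",
    "limit_maxbytes" => "67108864",
    "listen_disabled_num" => "0"
  }
}
summarize_stats(sample)
```

A percent_full figure that stays close to 100 suggests that items are being evicted regularly, and a connection_limit_hits figure that keeps climbing suggests that the connection limit is too low.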

It’s important to understand that Memcached is not a persistent store. If we stop its process and start it up again, then go back to the Rails console and try to read one of the values we stored earlier, we’ll get nil returned as the stored value will have been lost. There are some workarounds available that will make the store persistent but this isn’t what Memcached is designed to do. It’s designed for caching and so there should be no real problem if the data is lost.

One useful feature that Memcached has is the ability to expire a cache in a given amount of time. If we add a value to the cache we can specify a time period after which that value should expire. If we set a value to expire in five seconds we’ll be able to read it straightaway but if we wait then try again we’ll get nil instead.

>> Rails.cache.write(:foo, 1, expires_in: 5.seconds)
=> true
>> Rails.cache.read(:foo)
=> 1
>> Rails.cache.read(:foo)
=> nil

Using Dalli In Our Rails Application

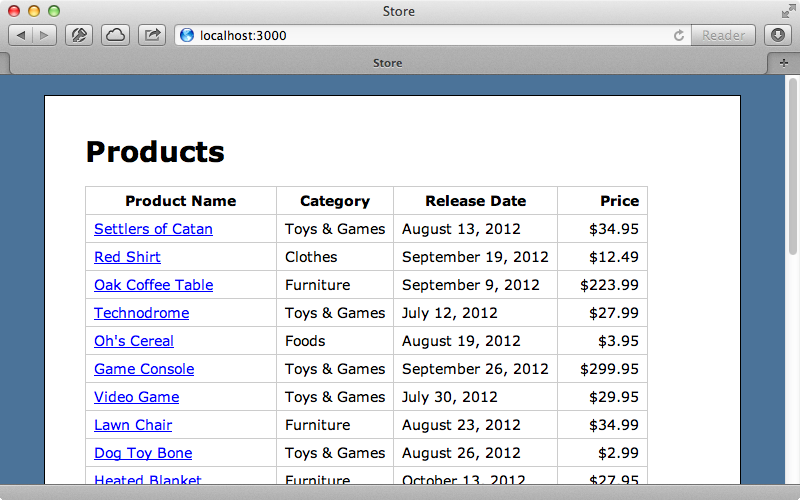

Back in our Rails application let’s take a look at some of the different ways that we can cache the contents of the page that lists the products.

One of the few caching techniques that does not use the cache store is page caching. If we cache the index page above, the result is written to a file on disk, as page caching assumes that a web server on the front end will serve this file directly so that the request never reaches the Rails application, which is the part that would read from Memcached.

class ProductsController < ApplicationController
  caches_page :index

  def index
    @products = Product.all
  end

  def show
    @product = Product.find(params[:id])
  end
end

That said, almost all other caching techniques will use the cache store. For example let’s say that we have HTTP caching in the index action.

class ProductsController < ApplicationController
  def index
    @products = Product.all
    expires_in 5.minutes, public: true
  end

  def show
    @product = Product.find(params[:id])
  end
end

This will be stored by Rack::Cache which will use the same cache store as our Rails application so this will be stored in Memcached. HTTP caching is covered in more detail in episode 321.

Fragment caching is another type of caching that we can use. This is done in the view layer and will also be stored in Memcached. We can enable it by calling cache and passing in a block then wrapping the code we want to cache in it.

<h1>Products</h1>

<% cache do %>
  <table id="products">
    <tr>
      <th>Product Name</th>
      <th>Category</th>
      <th>Release Date</th>
      <th>Price</th>
    </tr>
    <% @products.each do |product| %>
      <tr>
        <td><%= link_to(product.name, product) %></td>
        <td><%= product.category.name %></td>
        <td><%= product.released_on.strftime("%B %e, %Y") %></td>
        <td><%= number_to_currency(product.price) %></td>
      </tr>
    <% end %>
  </table>
<% end %>

This will use the current path as the cache’s key or we can alternatively specify a key name if we want to. We can also pass in other options like those we would pass to cache.write such as expires_in.

<% cache "products", expires_in: 5.minutes do %>
  # Cached content omitted.
<% end %>

Fragment caching is covered in more detail in episode 90.

Caching At The Model Level

What we’ll focus on next is caching at a lower level. The page that lists the products displays the category that each product is in. A Product belongs to a Category and if we look in the development log we’ll see that there are a lot of queries being performed to fetch each product’s category.

Product Load (0.4ms) SELECT "products".* FROM "products"
Category Load (0.2ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 2 LIMIT 1
Category Load (0.3ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 3 LIMIT 1
Category Load (0.2ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 4 LIMIT 1
CACHE (0.0ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 2 LIMIT 1
Category Load (0.2ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 5 LIMIT 1
CACHE (0.0ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 2 LIMIT 1
CACHE (0.0ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 2 LIMIT 1
CACHE (0.0ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 4 LIMIT 1
CACHE (0.0ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 2 LIMIT 1
CACHE (0.0ms) SELECT "categories".* FROM "categories" WHERE "categories"."id" = 4 LIMIT 1

ActiveRecord does some caching on its own so the performance isn’t too bad but if we want to cache this persistently between requests we can do so through Memcached. We’ll do this caching at the model layer in the Product model. We currently call product.category.name in the view but we’ll replace this with a category_name method that will return a cached version of the category.

<td><%= product.category_name %></td>

We can now write that method in the model. We’ll use fetch to do this and we’ll use an array to define the cache key. This will be converted to a string with each element of the array separated by a slash. We’ll set the cache value to expire in five minutes and pass in a block that will return the product’s category’s name.

def category_name
  Rails.cache.fetch([:category, category_id, :name], expires_in: 5.minutes) do
    category.name
  end
end
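To make the key conversion concrete, here is a simplified approximation in plain Ruby. The expand_key helper is made up for illustration; Rails’ own expansion (ActiveSupport::Cache.expand_cache_key) handles more cases, such as nested arrays and objects that respond to cache_key.

```ruby
# Hypothetical helper approximating how an array cache key is flattened
# into a single string: each element is stringified and joined with "/".
def expand_key(parts)
  Array(parts).map(&:to_s).join("/")
end

expand_key([:category, 2, :name]) # => "category/2/name"
```

This is why every product in the same category shares one cache entry: the key depends only on the category’s id, not on the product.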

The first time we reload the page the category names will be read from the database so that the cache values can be written but the second time the category names will be read from the cache. We can see this from the log file.

Cache read: category/2/name ({:expires_in=>300 seconds})

Cache fetch_hit: category/2/name ({:expires_in=>300 seconds})

Cache read: category/3/name ({:expires_in=>300 seconds})

Cache fetch_hit: category/3/name ({:expires_in=>300 seconds})

Cache read: category/4/name ({:expires_in=>300 seconds})

Cache fetch_hit: category/4/name ({:expires_in=>300 seconds})

Cache read: category/2/name ({:expires_in=>300 seconds})

This may or may not improve performance as it’s doing a read for each separate product and having to communicate with Memcached for each one. It’s always best to measure extensively when doing any kind of performance optimization like this. This is a case where it might be better to do a multi-read to fetch all the category names in one go instead of fetching them separately for each product, especially if we’re running Memcached over a network.
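One way the multi-read idea could look is sketched below. The category_names_for method and the FakeCache class are hypothetical: the method expects any object that responds to read_multi and write in the way Rails.cache does, and FakeCache is a hash-backed stand-in so that the sketch runs without Memcached. It uses string keys that match the expanded form seen in the log above, and it leaves the database lookup to a block.

```ruby
# Hypothetical helper: look up the names for many categories with a single
# read_multi call, then load and write back only the ones that were missing.
def category_names_for(category_ids, cache)
  ids   = category_ids.uniq
  keys  = ids.map { |id| "category/#{id}/name" }
  found = cache.read_multi(*keys) # one round trip for all the keys

  ids.zip(keys).each_with_object({}) do |(id, key), names|
    if found.key?(key)
      names[id] = found[key]
    else
      names[id] = yield(id) # e.g. Category.find(id).name in the application
      cache.write(key, names[id], expires_in: 300) # 300 seconds is five minutes
    end
  end
end

# Hash-backed stand-in for Rails.cache that counts read_multi calls.
class FakeCache
  attr_reader :round_trips

  def initialize
    @data = {}
    @round_trips = 0
  end

  def read_multi(*keys)
    @round_trips += 1
    keys.each_with_object({}) { |key, hits| hits[key] = @data[key] if @data.key?(key) }
  end

  def write(key, value, _options = {})
    @data[key] = value
    true
  end
end

cache = FakeCache.new
category_names_for([2, 3, 2, 4], cache) { |id| "Category #{id}" }
```

In a controller we might call this once with the category ids of all the listed products and pass the resulting hash to the view. Whether that beats the per-product fetch is something to measure rather than assume.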

In general it’s better to cache at a higher level such as in the view layer as it often results in less chatter. There are advantages to caching at a lower level like this, though, as we can then use the same cache multiple times in different views more easily. For example we have a show template which shows details for a single product including its category. With our model-level cache we can change this to use our category_name method so that it uses the same cache.

<p>
  <b>Category:</b>
  <%= @product.category_name %>
</p>

Now when we visit the page for a product it won’t need to perform a database query to fetch the category name. Both of these techniques have their advantages; this is just another tool to add to our caching toolbelt.

Expiring The Cache With Callbacks

One potential problem with caching that category is what to do when the category name changes. The new name will be picked up in five minutes since the cache is set to expire then but if we want the change to be effective immediately we can add a callback to the Category model like this:

class Category < ActiveRecord::Base
  attr_accessible :name
  has_many :products

  after_update :flush_name_cache

  def flush_name_cache
    Rails.cache.delete([:category, id, :name]) if name_changed?
  end
end

Now, after a category is updated the callback will be triggered and the cache item for that category’s name will be deleted, but only if the name attribute has actually changed. If we’re going to do something like this it’d be a good idea to move the caching logic into a class method in the Category model so that everything that depends on the cache key lives in the same place.

Deploying Memcached

Next we’ll show you how to deploy Memcached into production. First we’ll SSH into the Ubuntu 12.04 server that we’ve already set up.

$ ssh deployer@198.58.98.181

Once we’ve logged in, installing Memcached is easy with apt-get.

$ sudo apt-get install memcached

This will install and start Memcached. If we want to configure it we can find the configuration file at /etc/memcached.conf. The default options are pretty good but one setting that we might want to consider changing is the amount of memory that Memcached can use. This defaults to 64 megs but it’s worth bumping this up if you can spare the memory on the server.

# Start with a cap of 64 megs of memory. It's reasonable, and the daemon default
# Note that the daemon will grow to this size, but does not start out holding this much
# memory
-m 64

# Specify which IP address to listen on. The default is to listen on all IP addresses
# This parameter is one of the only security measures that memcached has, so make sure
# it's listening on a firewalled interface.
-l 127.0.0.1

Another important option is -l which can be set to restrict Memcached to accept only connections from the local machine. Memcached doesn’t offer much with regards to authentication but setting this option prevents the entire world from accessing it. It’s also a good idea to set up a firewall to make sure that Memcached’s port is closed. If we have a multi-server setup we’ll need to remove this line and handle the security elsewhere. We set up our server by using Capistrano recipes like we showed in episode 337 and here’s a recipe file for setting up Memcached.

set_default :memcached_memory_limit, 64

namespace :memcached do
  desc "Install Memcached"
  task :install, roles: :app do
    run "#{sudo} apt-get install memcached"
  end
  after "deploy:install", "memcached:install"

  desc "Setup Memcached"
  task :setup, roles: :app do
    template "memcached.erb", "/tmp/memcached.conf"
    run "#{sudo} mv /tmp/memcached.conf /etc/memcached.conf"
    restart
  end
  after "deploy:setup", "memcached:setup"

  %w[start stop restart].each do |command|
    desc "#{command} Memcached"
    task command, roles: :app do
      run "service memcached #{command}"
    end
  end
end

This will automatically install Memcached using apt-get and set up a configuration template which we’ve also added to our application.

# run as a daemon

-d

logfile /var/log/memcached.log

# memory limit

-m <%= memcached_memory_limit %>

# port

-p 11211

# user

-u memcache

# listen only on localhost (for security)

-l 127.0.0.1

This is similar to the default configuration file apart from the memcached_memory_limit variable which is set in the recipe. At the end of the recipe are stop, start and restart tasks for managing the daemon. If you use Capistrano to manage your server it’s easy to add this recipe and it should just work.

Configuring Dalli

We haven’t yet mentioned the different configuration options that we can pass in when we set up Dalli as the cache store. The README has a nice example of this. It’s a good idea to set up a namespace for the cache keys and we can also pass in a default expiration period and enable compression which is a good idea if we have a lot of large values and a limited amount of memory. There are a lot of other options that we can set and these are also documented in the README.
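As a rough illustration of the options mentioned here, the cache store line in an environment file might look something like the fragment below. The server address, the namespace and the expiry period are placeholder values chosen for this example, and the README remains the place to check the options that the installed version of Dalli supports.

```ruby
# config/environments/production.rb (fragment)
# The server address, namespace and expiry below are illustrative values.
config.cache_store = :dalli_store, "127.0.0.1:11211",
  { namespace: "store", expires_in: 1.day, compress: true }
```

A namespace keeps this application’s keys separate from those of any other application that shares the same Memcached server.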

If you do want some kind of authentication with Memcached it’s worth looking at SASL which allows us to use a username and password with it.

For further information on Memcached it’s a good idea to take a look at its wiki. This is useful and well-written and will probably have the answer to any questions you have.