#252 Metrics Metrics Metrics

Metrics provide a great way to review code and find the parts of it that can be improved. One of the ultimate metric tools for Ruby is metric_fu, which was covered back in episode 166. The only problem with it is that it can be a little difficult to set up as it has a large number of dependencies, especially when used in a Rails 3 application. A good alternative was the Caliper project, which allowed you to plug in any GitHub repo and get metrics from it, but unfortunately this has now closed down.

So we’re back to running metrics on our local machine, and there’s now a gem called Metrical that makes this easier. Metrical uses metric_fu in the background but wraps it in a single command that is simpler to run.

We’ll be demonstrating Metrical by running it against the source code for the Railscasts website. First we’ll need to install the Metrical gem.

$ gem install metrical

Installing this gem will install a number of dependent gems. Once they’ve all installed we can run the metrical command from the application’s directory and this will run a number of metrics on the application’s code via metric_fu. When we do this we’ll probably see a number of errors listed in the output but for the most part we can ignore them as long as the command completes. We’ll know when it completes successfully as the results will open up in a browser.

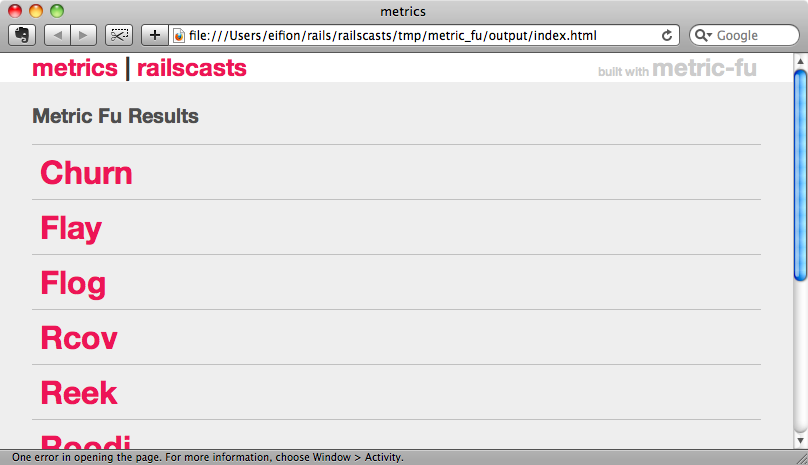

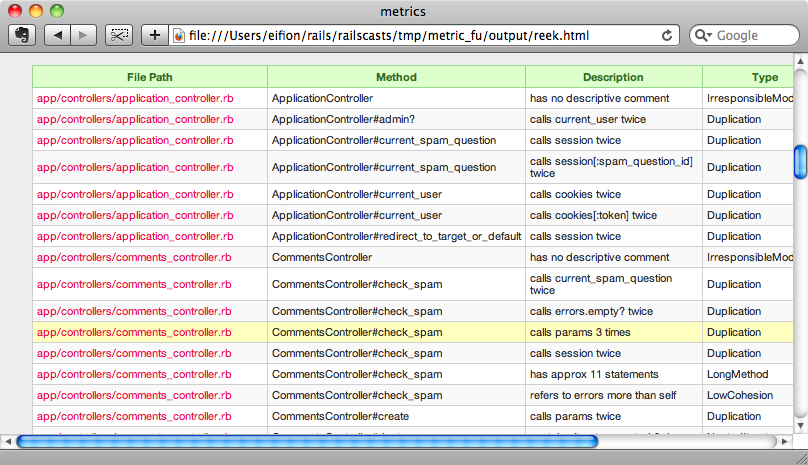

The first page lists the various metrics tools that metric_fu has run and we can click any one of these to see the results it has generated. For example if we look at Reek we’ll see a lot of useful feedback about the areas of the application that can be improved.

Next we’ll take a look at one of the other tools, Rcov. Of all the tools that Metrical runs, Rcov is the most likely to fail as it requires running the application’s tests and specs. If we run it on the Railscasts code it will show a coverage of 0.0%. The main problem here is that the code uses Ruby 1.9 which Rcov doesn’t yet support. Before we look into alternatives we’ll remove Rcov from our metrics. To configure metric_fu’s behaviour under Metrical we need to add a .metrics file to the application’s root directory.

MetricFu::Configuration.run do |config|
  config.metrics -= [:rcov]
end

When we run metrical now we’ll see fewer errors and the Rcov section will be missing from the results. Code coverage is a useful metric to have though, so what can we use instead of Rcov? There are a couple of alternatives we could use, CoverMe and SimpleCov. These both produce good results but we’re going to choose SimpleCov as it’s slightly easier to set up. First we need to add a reference to the gem in the Gemfile.

group :test do
  gem 'simplecov', '>=0.3.8', :require => false
end

Next we’ll add the following two lines to the test or spec helper file.

require 'simplecov'
SimpleCov.start 'rails'

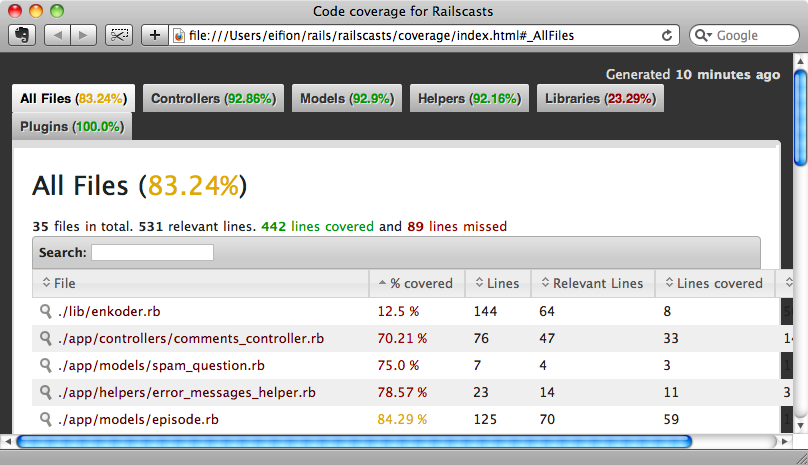

As ever, when adding a gem to an application we’ll need to run bundle to make sure that the gem is installed. After it has installed we can run rake spec to run the application’s specs and then open coverage/index.html to see the results.

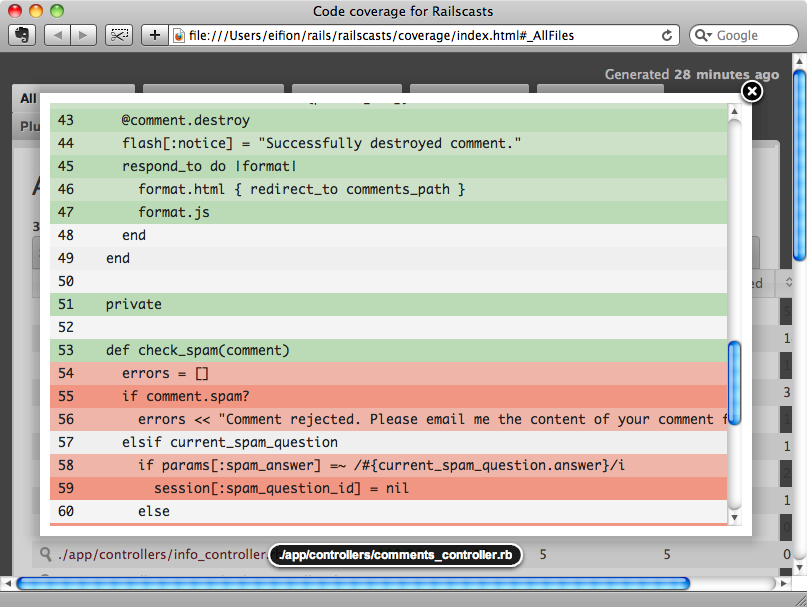

This gives us an easy way to see which files have the least code covered by tests or specs. The worst files are at the top and if we click on one of them, say the comments_controller, we can see exactly which code is or isn’t covered.

From there we can modify either the tests or the code to improve the coverage percentage.

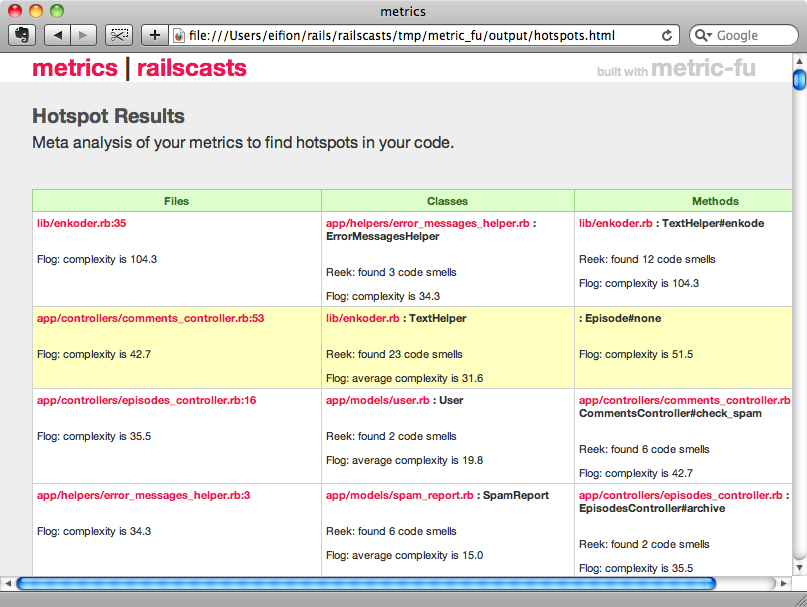
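SimpleCov builds on the Coverage library that ships with Ruby 1.9 and later. As a rough illustration of what the numbers in the report mean, the sketch below uses that library directly on a small, made-up method. The sample method, the constant and the helper name are assumptions for this example, and nothing here is taken from SimpleCov’s own code.

```ruby
require 'coverage'
require 'tempfile'

# Hypothetical code under test: the else branch is never exercised.
SAMPLE_SOURCE = <<'RUBY'
def describe_number(n)
  if n > 0
    "positive".dup
  else
    "zero or negative".dup
  end
end

describe_number(1)
RUBY

# Loads the given source with coverage tracking switched on and returns the
# per-line execution counts (nil marks a line with nothing to execute).
def line_counts_for(source)
  file = Tempfile.new(['coverage_sample', '.rb'])
  file.write(source)
  file.close

  Coverage.start
  load file.path
  result = Coverage.result

  # Match on the file name, as the reported path may be a resolved form of file.path.
  _path, counts = result.find { |path, _| File.basename(path) == File.basename(file.path) }
  counts
ensure
  file.unlink if file
end

counts = line_counts_for(SAMPLE_SOURCE)
relevant = counts.compact                       # lines that could have run
covered = relevant.count { |hits| hits > 0 }    # lines that did run
percent = (100.0 * covered / relevant.size).round(1)
uncovered_lines = counts.each_index.select { |i| counts[i] == 0 }.map { |i| i + 1 }

puts "#{percent}% covered, lines never run: #{uncovered_lines.inspect}"
```

The line inside the else branch is reported as never run, which is the same kind of information that SimpleCov highlights when we click through to a file in its report.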

We now have a good solution for code coverage, so next we’ll look at the two new metrics that metric_fu provides: Hotspots and the Rails Best Practices report.

Hotspots gives you a generic overview of the various files, classes and methods in your application and lists the “worst” ones at the top. It takes several factors into account to determine the worst, primarily Reek and Flog, and is a good starting point for finding the places in your app that need the most attention.

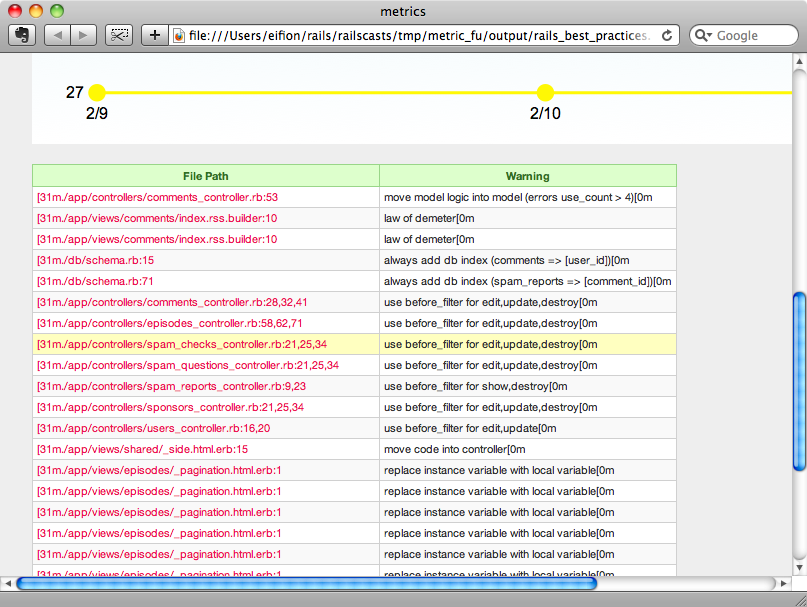
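To give a feel for how a combined ranking like Hotspots can work, here is a toy sketch that merges two scores per file into a single ordering. This is not metric_fu’s actual algorithm, which isn’t described here. The file names, the scores and the rank_hotspots helper are all hypothetical, and the weighting (each score scaled against the highest value seen, then averaged) is simply an assumption chosen for the example.

```ruby
# Hypothetical per-file scores; for both tools a higher number means worse.
SCORES = {
  "app/models/example_a.rb" => { :flog => 48.0, :reek => 6 },
  "app/models/example_b.rb" => { :flog => 12.0, :reek => 3 },
  "app/models/example_c.rb" => { :flog => 24.0, :reek => 0 }
}

# Scales each metric against its highest value, averages the scaled values
# for every file and returns [file, combined_score] pairs, worst first.
def rank_hotspots(scores)
  return [] if scores.empty?

  metrics = scores.values.flat_map(&:keys).uniq
  maxima = {}
  metrics.each do |metric|
    maxima[metric] = scores.values.map { |s| s.fetch(metric, 0) }.max.to_f
  end

  combined = scores.map do |file, file_scores|
    parts = metrics.map do |metric|
      maxima[metric].zero? ? 0.0 : file_scores.fetch(metric, 0) / maxima[metric]
    end
    [file, (parts.inject(:+) / metrics.size).round(2)]
  end

  combined.sort_by { |_file, score| -score }
end

ranking = rank_hotspots(SCORES)
ranking.each { |file, score| puts "#{score}  #{file}" }
```

A file that scores badly on both measures rises to the top, while one that only one tool complains about sits lower down, which is the general idea behind reading the list from the top.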

The Rails Best Practices report tells us of the places in our Rails app that don’t match best practices.

The output for this report looks a little odd when viewed in a browser. As an alternative we can run the gem directly instead of through metric_fu. As we’ve installed Metrical we should already have the gem, but if not we can install it by running:

$ gem install rails_best_practices

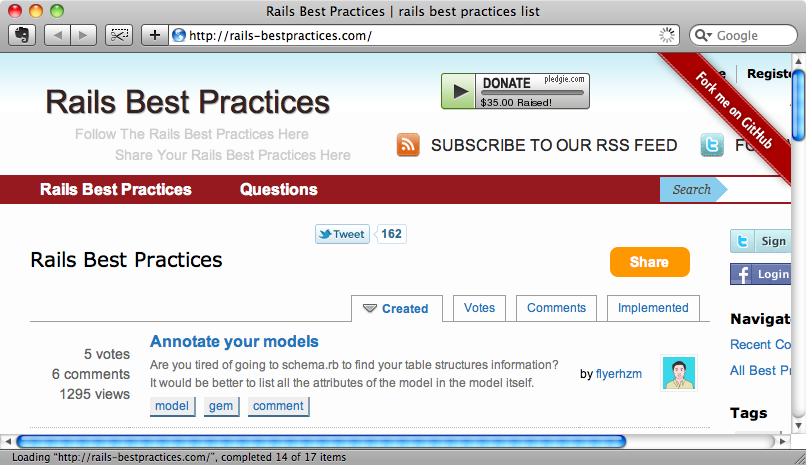

When we run the command above the output will suggest that we visit the Rails Best Practices website so let’s do that.

The Rails Best Practices website is great and has a long list of best practices to browse through and to use to improve our Rails applications. Under the “Implemented” tab are the practices that are used by the gem and these will be checked when we run the rails_best_practices command.

When we run this command we’ll get a list of suggestions returned in red.

$ rails_best_practices
Analyzing: 100% |oooooooooooooooooooooooooooooooooooooooooo| Time: 00:00:00
./app/controllers/comments_controller.rb:53 - move model logic into model (errors use_count > 4)
./app/views/comments/index.rss.builder:10 - law of demeter
./app/views/comments/index.rss.builder:10 - law of demeter
./db/schema.rb:15 - always add db index (comments => [user_id])
./db/schema.rb:71 - always add db index (spam_reports => [comment_id])
./app/controllers/comments_controller.rb:28,32,41 - use before_filter for edit,update,destroy
...

The red text can be a little hard on the eyes. As an alternative we can specify that the output is in HTML and also make the file names links that open the relevant file in TextMate by adding some options.

$ rails_best_practices -f html --with-textmate

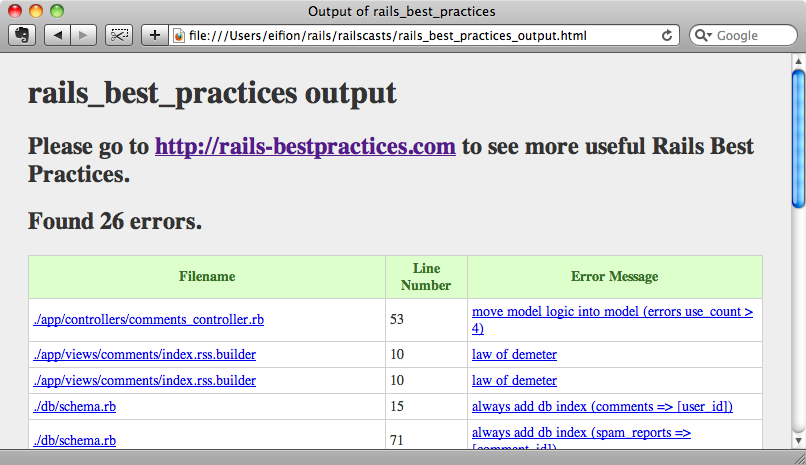

This will generate an HTML file called rails_best_practices.html that we can open in a browser.

The command found 26 errors in this application. If we click on any of the file names the relevant file will be opened in TextMate. Clicking on an error message will take us to a page on the Rails Best Practices site that gives us a description of the relevant problem and how we can refactor our code and improve it. Now all we have to do is work through the list and see how we can improve our code.
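As an example of the kind of change these suggestions point towards, here is a minimal sketch of a “law of demeter” fix written in plain Ruby so that it runs without Rails. The User and Comment classes and their attributes are hypothetical stand-ins, not code from the Railscasts application, and Forwardable from the standard library takes the place of the delegation helper we would normally use in a Rails model.

```ruby
require 'forwardable'

# Hypothetical stand-ins for models; not taken from the Railscasts source.
User = Struct.new(:name)

class Comment
  extend Forwardable

  attr_reader :user, :content

  # Exposes the user's name as comment.user_name so that callers
  # don't need to reach through the comment to its user.
  def_delegator :@user, :name, :user_name

  def initialize(user, content)
    @user = user
    @content = content
  end
end

comment = Comment.new(User.new("Alice"), "Nice episode!")

# Before: the caller knows about the comment's internals.
puts comment.user.name

# After: the caller only talks to the comment.
puts comment.user_name
```

In a Rails model the equivalent would usually be written as delegate :name, :to => :user, :prefix => true, which generates the same user_name method.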

As with all metrics these recommendations shouldn’t be taken as gospel. Just because a particular technique is considered a best practice in general doesn’t mean that it’s best for our application. We should take the list that’s generated as a general guideline to find the places where our application could be improved. Getting the list down to zero shouldn’t be our goal. The same can be said for all of the metrics in the list. Don’t take all of the suggestions on board without giving some thought as to whether they’re best for your application, and make sure that each suggestion actually improves your application’s code. Metrics should be used as hints as to how your code could be improved.